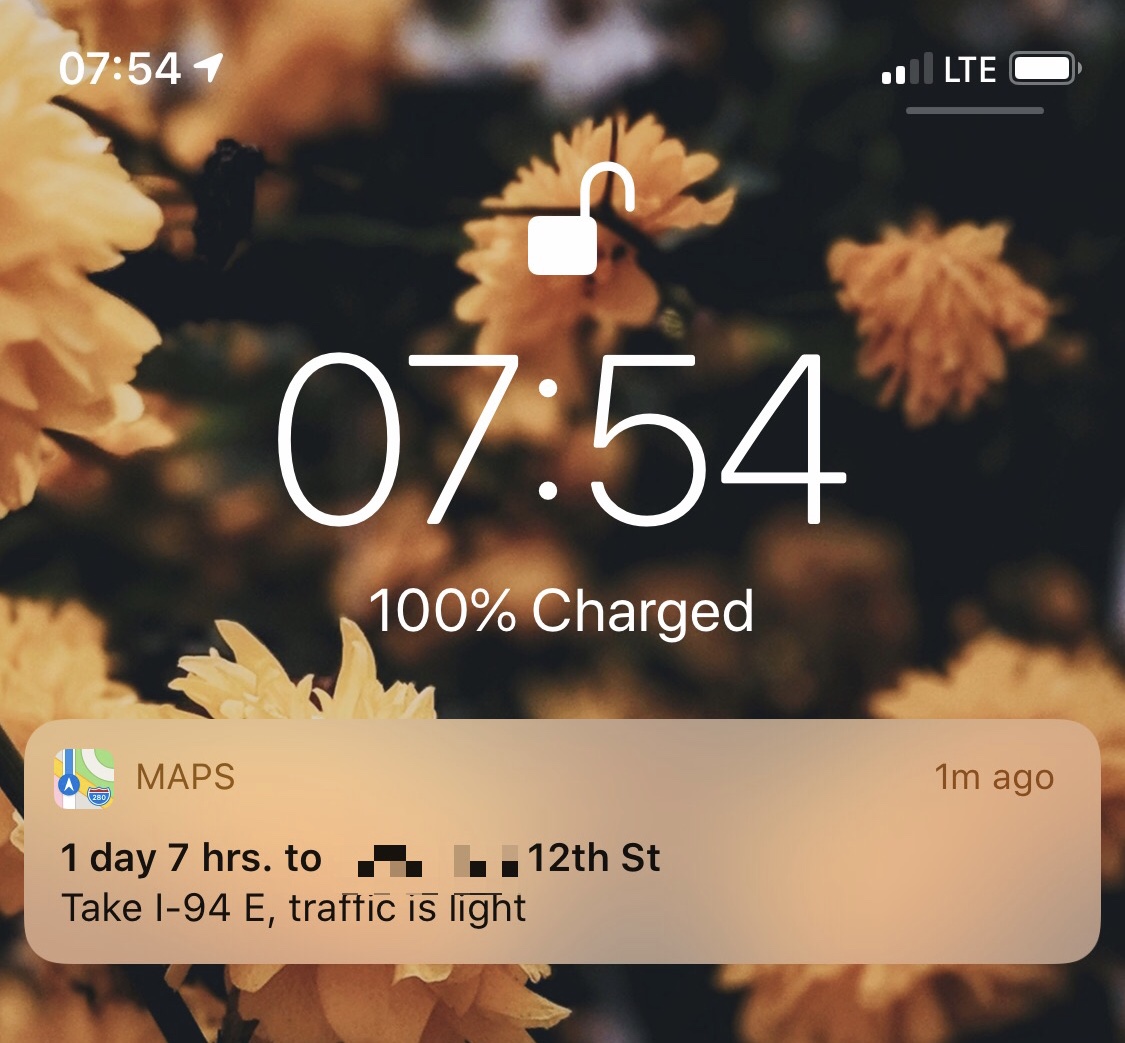

A recent cold snap seems to have increased my propensity to experience bugs. I’m usually a walking commuter to my day job, but I’ve happily accepted a lift from my partner all week long as temperatures dropped below the −30 °C mark every morning. As I got into the car this morning, I noticed a strange notification on my lock screen:

This appears to be a Siri suggestion — a nudge by the system to show a hopefully-useful shortcut to a common task. As Apple puts it:

> As Siri learns your routines, you get suggestions for just what you need, at just the right time. For example, if you frequently order coffee mid morning, Siri may suggest your order near the time you normally place it.

Since I go to work at a similar time every day, it tells me how long my commute will take and gives me the option to get directions. Nice, right?

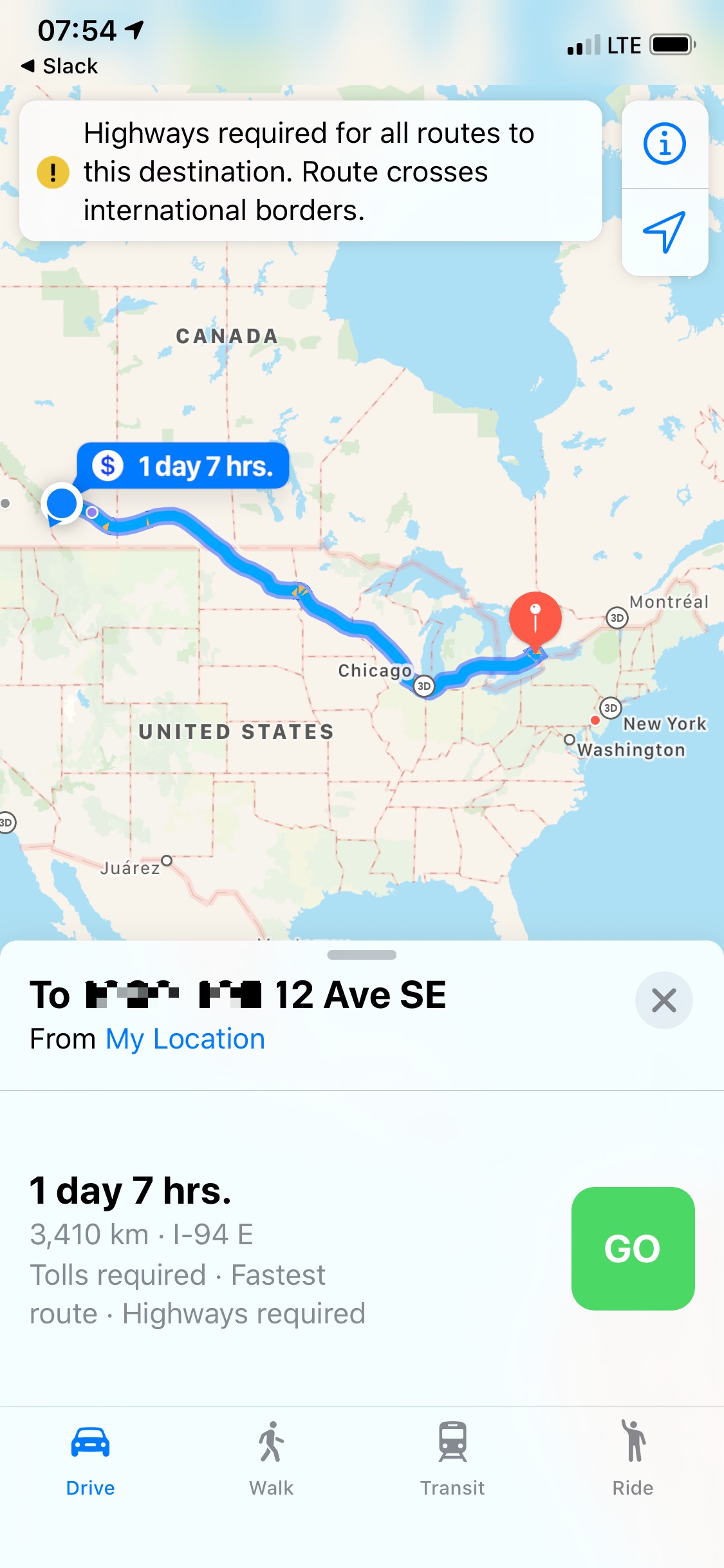

Except something is plainly not right: it’s going to take me over a day to get to work? Here’s the route it thinks I should take:

I found this hilarious — obviously — but also fascinating. How did it get this so wrong?

My assumption was that my phone knew that I commuted to work daily, so it figured out the address of my office. And then, somehow, it got confused between the location it knows and the transcribed address it has stored, and then associated that with an address in or near Rochester, New York. But that doesn’t seem right.

Then, I thought that perhaps the details in my contact card were wrong. My work address is stored in there, and Siri mines that card for information. But there’s a full address in that card including country and postal code, so I’m not sure it could get it so wrong.

I think the third option is most likely: I have my work hours as a calendar appointment every day, and the address only includes the unit and street name, not my city, country, or postal code. I guess Apple Maps’ search engine must have searched globally for that address and ended up in upstate New York.

But why? Why would it think that an appointment in my calendar is likely to be anywhere other than near where I live, particularly when it’s recurring? Why doesn’t Apple Maps’ search engine or Siri — I don’t know which is responsible in this circumstance — prioritize nearby locations? Why doesn’t it prioritize frequent locations?
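I have no idea how Apple Maps or Siri actually resolves a partial address, so what follows is only a toy sketch of what "prioritize nearby locations" could mean. It assumes a geocoder has already returned several candidate matches for an ambiguous street address, and it simply sorts them by great-circle distance from where the user is. The `Candidate` type, the `rank_by_proximity` function, and all coordinates are hypothetical placeholders of mine, not anything from Apple's software.

```python
from dataclasses import dataclass
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0


@dataclass(frozen=True)
class Candidate:
    """A hypothetical geocoder match for an ambiguous address."""
    label: str
    lat: float
    lon: float


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points, in kilometres."""
    dlat = radians(lat2 - lat1)
    dlon = radians(lon2 - lon1)
    a = (sin(dlat / 2) ** 2
         + cos(radians(lat1)) * cos(radians(lat2)) * sin(dlon / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * asin(sqrt(a))


def rank_by_proximity(candidates, user_lat, user_lon):
    """Return candidates sorted from nearest to farthest from the user."""
    return sorted(
        candidates,
        key=lambda c: haversine_km(user_lat, user_lon, c.lat, c.lon),
    )


# Illustrative, made-up coordinates: one match across the continent,
# one match a couple of kilometres from the user.
user_lat, user_lon = 51.05, -114.07
candidates = [
    Candidate("match far away", 43.16, -77.61),
    Candidate("match nearby", 51.04, -114.05),
]

best = rank_by_proximity(candidates, user_lat, user_lon)[0]
print(best.label)
```

A real system would presumably weigh more than distance, such as how often I visit a place or how well the text matches, but even this crude ordering would not send a daily commute to another country.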

If you look closely, you’ll also notice another discrepancy: the notification says that it’s going to give me directions to “12th St”, but the directions in Maps are to “12 Ave SE”. Why would this discrepancy exist?

It’s not just the bug (or, more likely, the cascading series of bugs) that fascinates me, nor the fact that it’s so wrong. It’s this era of mystery box machine learning, where the results sometimes look like magic and, at other times, are incomprehensible. Every time some lengthy IF-ELSE chain helpfully suggests driving directions across the continent or thinks I only ever message myself, my confidence in my phone’s ability to do basic tasks is immediately erased. How can I trust it when it makes such blatant mistakes, especially when there’s no way to tell it that it’s wrong?